Agent Monitoring - Live

A powerful framework for evaluating and monitoring AI agent performance in near real time by systematically analyzing interactions through the lens of question intent, contextual relevance, and ground truth alignment.

Detect Issues Before They Escalate

Behavioral Analysis

Detect subtle shifts in agent behavior—declining accuracy, increased hallucination rates, or context drift—before they escalate into systemic failures.

Ground Truth Alignment

Benchmark responses against verified truths to pinpoint when an agent begins generating plausible-sounding but incorrect or fabricated information.
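As a rough illustration of benchmarking a response against a verified answer, the sketch below computes a token-level F1 overlap. This is a generic lexical proxy, not RagMetrics' scoring method, which this page does not describe. The function names and the `min_f1` cutoff are assumptions made for the example. Lexical overlap cannot catch a fluent answer that reuses the right words to state the wrong fact, so treat it as a first-pass filter only.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def token_f1(response: str, reference: str) -> float:
    """Token-level F1 between an agent response and a verified reference."""
    resp = Counter(tokenize(response))
    ref = Counter(tokenize(reference))
    overlap = sum((resp & ref).values())  # tokens shared by both, with counts
    if overlap == 0:
        return 0.0
    precision = overlap / sum(resp.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


def is_misaligned(response: str, reference: str, min_f1: float = 0.5) -> bool:
    """Flag a response whose overlap with the reference is below min_f1.

    min_f1 is an illustrative cutoff, not a recommended value.
    """
    return token_f1(response, reference) < min_f1
```

In practice a check like this would sit alongside a semantic or model-based judge rather than replace one.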

Trend Tracking

Track performance trends over time, flagging when an agent degrades or when updates introduce regressions in reasoning quality.
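One simple way to flag degradation over time is to compare a sliding window of recent scores against an early baseline. The sketch below assumes each evaluation yields a single score between 0 and 1. The function name, the window size, and the `max_drop` tolerance are all hypothetical choices for illustration.

```python
from statistics import mean


def flag_regression(scores: list[float], window: int = 5, max_drop: float = 0.1):
    """Return the index of the first score at which the mean of the most
    recent `window` scores has fallen more than `max_drop` below the
    baseline (the mean of the first `window` scores). Return None if no
    such drop occurs or there is not enough data for two full windows.
    """
    if len(scores) < 2 * window:
        return None
    baseline = mean(scores[:window])
    for end in range(2 * window, len(scores) + 1):
        recent = mean(scores[end - window:end])
        if baseline - recent > max_drop:
            return end - 1  # index of the last score in the offending window
    return None
```

A fixed baseline is the simplest choice. Comparing against the window immediately before a deployment would be a natural variant for catching regressions introduced by an update.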

Continuous Evaluation Loop

Early Intervention

Empowers developers and product teams to intervene early, retrain models, or fine-tune prompts with precision before issues impact users.

Transparency & Trust

Provides explainable metrics and annotated feedback, making it easier to communicate AI reliability to stakeholders and build confidence.

Context-Aware Intelligence

Ensures AI agents remain accurate, context-aware, and aligned with user expectations through systematic contextual relevance analysis.

A Strategic Safeguard for Responsible AI Deployment

In an era where AI agents are increasingly embedded in critical workflows—from healthcare to finance—RagMetrics acts as a safeguard, ensuring that these systems remain accurate, context-aware, and aligned with user expectations.

It's not just a QA tool—it's a strategic layer of intelligence for responsible AI deployment.

Easy Integration with No-Code Platforms

RagMetrics seamlessly integrates with popular no-code automation platforms like n8n and Zapier, enabling teams to build powerful monitoring workflows without writing a single line of code.

Automated Workflows

Set up automated monitoring pipelines that trigger alerts, generate reports, and route insights to your team's communication channels whenever quality thresholds are breached.
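The threshold check at the heart of such a pipeline can be sketched in a few lines. The metric names and floor values below are hypothetical placeholders, not RagMetrics defaults. The example only decides which metrics breached their floor; routing the resulting alerts is left to whatever automation platform is in use.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    metric: str
    value: float
    threshold: float


# Hypothetical minimum acceptable values per metric.
THRESHOLDS = {"accuracy": 0.85, "contextual_relevance": 0.80}


def check_thresholds(metrics: dict[str, float],
                     thresholds: dict[str, float] = THRESHOLDS) -> list[Alert]:
    """Return one Alert for every reported metric that is below its floor.

    Metrics with no configured threshold, and thresholds with no reported
    metric, are ignored.
    """
    return [
        Alert(name, metrics[name], floor)
        for name, floor in thresholds.items()
        if name in metrics and metrics[name] < floor
    ]
```

For example, `check_thresholds({"accuracy": 0.9, "contextual_relevance": 0.7})` yields a single alert for `contextual_relevance`.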

Rapid Deployment

Connect RagMetrics to your existing tech stack in minutes using pre-built integrations, allowing non-technical teams to implement sophisticated AI monitoring solutions effortlessly.

Whether you're routing evaluation results to Slack, updating dashboards in real time, or triggering model retraining workflows, RagMetrics' flexible API and webhook support make it easy to integrate monitoring into your existing operations.
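For teams that do want to script the Slack routing themselves, the sketch below builds a POST request in the shape Slack incoming webhooks accept, a JSON body with a `text` field. The evaluation dictionary and its `id` and `score` keys are assumptions for illustration. This page does not specify the format of RagMetrics' webhook payloads, so the field names would need to be adapted to the real data.

```python
import json
import urllib.request


def build_slack_request(webhook_url: str, evaluation: dict) -> urllib.request.Request:
    """Build (but do not send) a Slack incoming-webhook request.

    `evaluation` is a hypothetical result record with "id" and "score" keys.
    """
    text = f"Evaluation {evaluation['id']}: score {evaluation['score']:.2f}"
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> int:
    """Send the request and return the HTTP status code."""
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status
```

Separating request construction from sending keeps the payload easy to inspect and test without making a network call.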

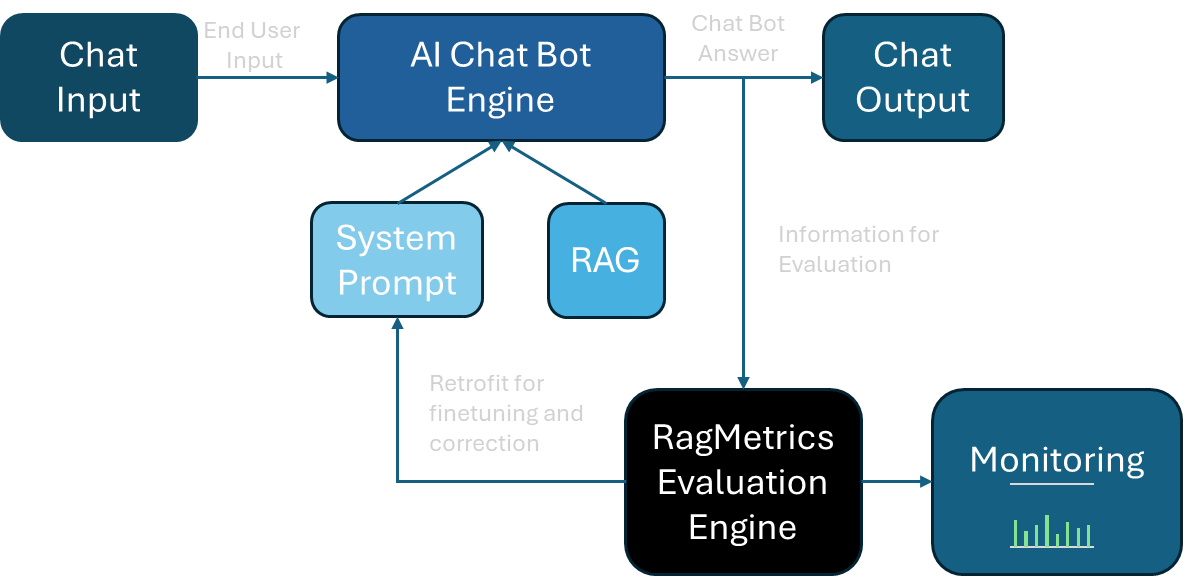

Example: Live Chat Evaluation

RagMetrics continuously evaluates live chat interactions in near real time, capturing both user inputs and bot responses to assess quality, hallucinations, and contextual relevance. Evaluation results can be fed back to revise the prompt and produce better answers. The evaluation engine also surfaces actionable insights for monitoring performance trends and supports a feedback loop for system prompt refinement and model fine-tuning, so your AI chatbot maintains its performance over time.
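The feedback loop described above can be pictured as collecting scored chat turns and pulling out the weak ones for prompt review. The sketch below is a minimal model of that idea. The `ChatTurn` record, the metric name, and the `floor` value are hypothetical and do not reflect RagMetrics' data model.

```python
from dataclasses import dataclass, field


@dataclass
class ChatTurn:
    user_input: str
    bot_response: str
    # Metric name -> score in [0, 1]; filled in by whatever evaluator is used.
    scores: dict[str, float] = field(default_factory=dict)


def turns_needing_review(turns: list[ChatTurn],
                         metric: str = "contextual_relevance",
                         floor: float = 0.7) -> list[ChatTurn]:
    """Return turns whose score for `metric` is below `floor`.

    Turns that have no score for the metric are skipped rather than flagged.
    """
    return [t for t in turns if t.scores.get(metric, 1.0) < floor]
```

The selected turns could then serve as concrete examples when revising the system prompt or assembling a fine-tuning set.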