Evaluate GenAI

Comprehensive evaluation framework to test, measure, and improve your AI models with precision and confidence.

Evaluation Workflow

Evaluation Data

Bring your own data or use our Synthetic Data Generator

Define Prompts

Compare foundation models or prompts

Choose Metrics

Select from 200+ criteria or define your own

Evaluate

Run Retrieval or Generation testing of your RAG or GenAI tools

Analyze Results

Review results and fine-tune your system
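
As a rough illustration of what retrieval testing in the Evaluate step can measure, the sketch below computes two widely used retrieval metrics, recall@k and mean reciprocal rank (MRR), over a small labeled set. It is generic Python, not the RagMetrics implementation; the function names and the data layout (a ranked list of retrieved document IDs plus a set of relevant IDs per query) are assumptions made for illustration.

```python
from typing import List, Sequence, Set, Tuple


def recall_at_k(retrieved: Sequence[str], relevant: Set[str], k: int) -> float:
    """Fraction of the relevant documents that appear in the top-k results."""
    if not relevant:
        return 0.0
    hits = sum(1 for doc_id in retrieved[:k] if doc_id in relevant)
    return hits / len(relevant)


def reciprocal_rank(retrieved: Sequence[str], relevant: Set[str]) -> float:
    """1/rank of the first relevant document, or 0.0 if none was retrieved."""
    for rank, doc_id in enumerate(retrieved, start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0


def evaluate_retrieval(runs: List[Tuple[Sequence[str], Set[str]]], k: int = 3) -> dict:
    """Average recall@k and MRR over (retrieved, relevant) pairs, one per query."""
    recalls = [recall_at_k(retrieved, relevant, k) for retrieved, relevant in runs]
    ranks = [reciprocal_rank(retrieved, relevant) for retrieved, relevant in runs]
    return {"recall@k": sum(recalls) / len(runs), "mrr": sum(ranks) / len(runs)}


if __name__ == "__main__":
    # Hypothetical evaluation data: ranked retrieval output and gold labels.
    runs = [
        (["d3", "d1", "d7"], {"d1"}),
        (["d2", "d9", "d4"], {"d4", "d5"}),
    ]
    print(evaluate_retrieval(runs, k=3))
```

Generation testing would typically score the model's answers instead (for example, faithfulness to the retrieved context), which usually calls for an LLM judge or a reference answer rather than simple set arithmetic.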

Select your Evaluation Environment

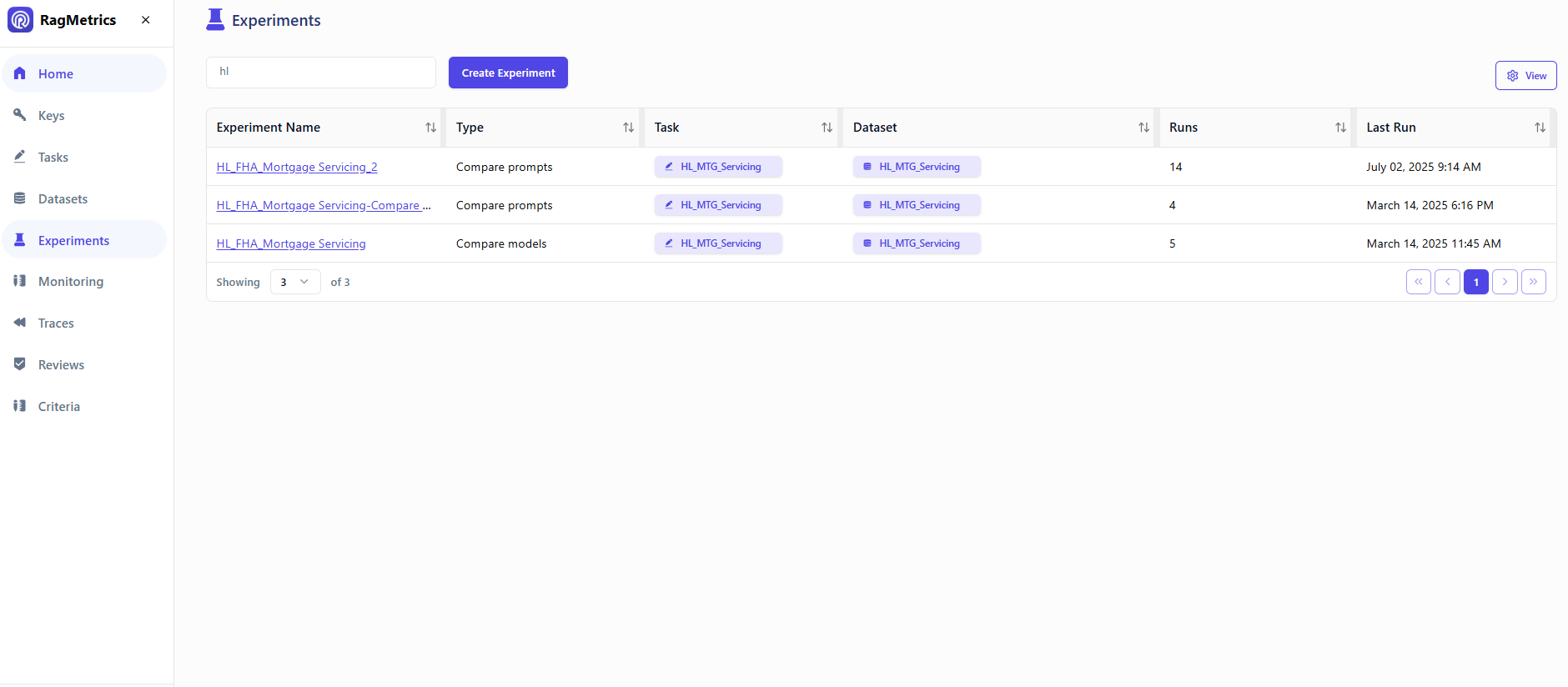

RagMetrics GUI

Run end-to-end evaluations in our GUI, from synthetic data generation to reviewing results.

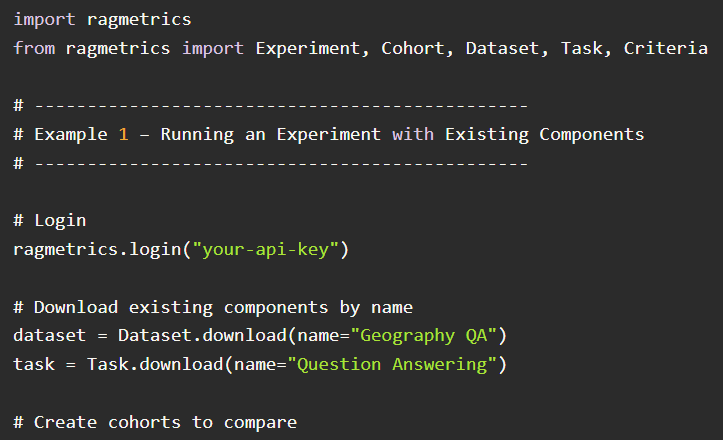

API

Integrate the evaluation process into your pipeline using the RagMetrics API.

Automated Testing

Run automated evaluation suites across your AI models to identify performance issues before deployment.
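One common way to catch performance issues before deployment is a threshold gate that compares an evaluation run's aggregate scores against agreed minimums. The sketch below is generic Python and does not use the RagMetrics API; the metric names, scores, and thresholds are hypothetical values chosen for illustration.

```python
from typing import Dict, List


def check_thresholds(scores: Dict[str, float], thresholds: Dict[str, float]) -> List[str]:
    """Return one message per metric that is missing or below its minimum."""
    failures: List[str] = []
    for metric, minimum in thresholds.items():
        value = scores.get(metric)
        if value is None:
            failures.append(f"{metric}: missing from results")
        elif value < minimum:
            failures.append(f"{metric}: {value:.2f} < {minimum:.2f}")
    return failures


if __name__ == "__main__":
    # Hypothetical aggregate scores from one evaluation run.
    scores = {"faithfulness": 0.91, "answer_relevance": 0.78}
    # Hypothetical minimum acceptable score per metric.
    thresholds = {"faithfulness": 0.85, "answer_relevance": 0.80}
    failures = check_thresholds(scores, thresholds)
    print("PASS" if not failures else "FAIL: " + "; ".join(failures))
```

In a CI job, a non-empty `failures` list would typically be turned into a non-zero exit status (for example with `sys.exit(1)`) so that the deployment step does not run.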

Custom Metrics

Define and track custom evaluation metrics that matter most to your specific use case and business needs.
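Conceptually, a custom metric is a function that maps one evaluation record (question, model answer, expected answer) to a score. The sketch below is generic Python, not RagMetrics' custom-criteria interface; the `EvalRecord` type, the `metric` decorator, the registry, and both metric definitions are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Dict, List


@dataclass
class EvalRecord:
    question: str
    answer: str
    expected: str


# Hypothetical registry mapping a metric name to a scoring function in [0, 1].
Metric = Callable[[EvalRecord], float]
METRICS: Dict[str, Metric] = {}


def metric(name: str) -> Callable[[Metric], Metric]:
    """Decorator that registers a scoring function under a metric name."""
    def register(fn: Metric) -> Metric:
        METRICS[name] = fn
        return fn
    return register


@metric("exact_match")
def exact_match(rec: EvalRecord) -> float:
    # 1.0 if the answer equals the expected answer, ignoring case and outer whitespace.
    return float(rec.answer.strip().lower() == rec.expected.strip().lower())


@metric("keyword_coverage")
def keyword_coverage(rec: EvalRecord) -> float:
    # Share of whitespace-separated expected words that also appear in the answer.
    expected = set(rec.expected.lower().split())
    if not expected:
        return 0.0
    answer = set(rec.answer.lower().split())
    return len(expected & answer) / len(expected)


def score_dataset(records: List[EvalRecord]) -> Dict[str, float]:
    """Average every registered metric across the dataset."""
    return {name: mean(fn(r) for r in records) for name, fn in METRICS.items()}


if __name__ == "__main__":
    records = [
        EvalRecord("Capital of France?", "Paris", "paris"),
        EvalRecord("Largest planet?", "It is Saturn", "Jupiter"),
    ]
    print(score_dataset(records))
```

Keeping metrics as plain functions behind a registry makes it easy to add a business-specific criterion without touching the code that runs the evaluation.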

Real-Time Insights

Get instant feedback on model performance with real-time dashboards and detailed analytics.

Comparative Analysis

Compare different models, versions, and configurations side-by-side to make informed decisions.
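A side-by-side comparison usually reduces to per-metric means for each configuration, the difference between them, and a paired win rate over the same examples. The sketch below is generic Python under those assumptions; the function names and the per-example score lists are hypothetical and do not come from RagMetrics.

```python
from statistics import mean
from typing import Dict, List

Scores = Dict[str, List[float]]  # metric name -> per-example scores


def summarize(per_example: Scores) -> Dict[str, float]:
    """Mean score per metric for one configuration."""
    return {metric: mean(values) for metric, values in per_example.items()}


def compare(baseline: Scores, candidate: Scores) -> Dict[str, Dict[str, float]]:
    """Side-by-side means and candidate-minus-baseline delta for shared metrics."""
    base, cand = summarize(baseline), summarize(candidate)
    return {
        m: {"baseline": base[m], "candidate": cand[m], "delta": cand[m] - base[m]}
        for m in sorted(base.keys() & cand.keys())
    }


def win_rate(baseline: List[float], candidate: List[float]) -> float:
    """Share of paired examples where the candidate scores strictly higher."""
    pairs = list(zip(baseline, candidate))
    return sum(c > b for b, c in pairs) / len(pairs)


if __name__ == "__main__":
    # Hypothetical per-example scores for two configurations on the same dataset.
    baseline = {"faithfulness": [0.8, 0.6, 0.9], "relevance": [0.7, 0.7, 0.7]}
    candidate = {"faithfulness": [0.9, 0.6, 1.0], "relevance": [0.6, 0.8, 0.7]}
    for metric, row in compare(baseline, candidate).items():
        wins = win_rate(baseline[metric], candidate[metric])
        print(f"{metric:<14} {row['baseline']:.3f} {row['candidate']:.3f} "
              f"{row['delta']:+.3f} win={wins:.2f}")
```

Reporting the win rate alongside the mean delta helps distinguish a configuration that is slightly better everywhere from one whose average is driven by a few large gains.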