Live AI Evaluation

Live AI Evaluation is a real-time monitoring and scoring mechanism that integrates directly into your LLM pipeline. Monitor, measure, and improve your generative AI outputs with confidence as they are generated in production.

Traditional offline benchmarks often fail to capture the dynamic nature of user interactions. RagMetrics Live AI Evaluations enable continuous assessment as your model generates responses, ensuring quality metrics are always up to date.

Instant Judgements

Get real-time quality assessments for every LLM response.

Build Around

Build your own solution around Live AI Evaluation and implement a custom judging pipeline.

Scalable API

Simple REST API that scales from prototypes to production with thousands of evaluations.

How It Works

Pipeline Connection

Connect your LLM pipeline to RagMetrics using the Live AI Evaluation API.
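As a rough illustration of what connecting a pipeline might involve, the sketch below packages a prompt, any retrieved context, and the model's response into a JSON request and posts it to an evaluation endpoint. The endpoint URL, the `X-API-Key` header, and every payload field name (`input`, `output`, `context`, `criteria`) are hypothetical placeholders, not the documented RagMetrics API; check the official API reference for the real schema.

```python
import json
import urllib.request
from typing import Optional

# HYPOTHETICAL endpoint and field names: placeholders, not the documented RagMetrics API.
EVAL_ENDPOINT = "https://api.example.com/v1/live-evaluations"


def build_evaluation_payload(prompt: str, response: str,
                             criteria: list[str],
                             context: Optional[list[str]] = None) -> dict:
    """Bundle one LLM interaction into a JSON-serializable evaluation request."""
    if not criteria:
        raise ValueError("at least one evaluation criterion is required")
    return {
        "input": prompt,           # what the user asked
        "output": response,        # what the model answered
        "context": context or [],  # retrieved documents, if any (RAG pipelines)
        "criteria": criteria,      # metrics to score, e.g. "relevance"
    }


def submit_evaluation(payload: dict, api_key: str,
                      url: str = EVAL_ENDPOINT, timeout: float = 5.0) -> dict:
    """POST the payload as JSON and return the decoded JSON response."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "X-API-Key": api_key,  # hypothetical auth header
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

In a live pipeline, `build_evaluation_payload` would be called right after the model returns, and `submit_evaluation` would ideally run off the request path (for example, in a background worker) so that evaluation latency does not delay the user's response.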

Evaluation Criteria

Choose from over 200 customizable metrics, including accuracy, relevance, coherence, and hallucination.

Real-Time Scoring

Scores are assigned instantly as the AI generates responses and are available through the GUI dashboard or via API calls.

Feedback Loop

Poor outputs can be flagged, allowing teams to quickly apply improvements that refine prompts and models.

Flexible and Powerful Evaluations

Multi-Criteria Evaluations

Evaluate each AI response across multiple dimensions, such as accuracy, relevance, and coherence.

Enable Continuous Monitoring

Set up automated monitoring workflows that run 24/7 to ensure your AI system maintains high quality standards.
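One common way to implement continuous monitoring, shown here as a generic sketch rather than RagMetrics' own mechanism, is to keep a rolling window of recent scores per criterion and flag any criterion whose windowed average drops below a floor. The `RollingMonitor` class, the window size, the floor value, and the 0-to-1 score scale are all assumptions for illustration.

```python
from collections import defaultdict, deque


class RollingMonitor:
    """Hypothetical helper: rolling average per criterion (scores assumed in [0, 1])."""

    def __init__(self, window: int = 100, floor: float = 0.7):
        if window <= 0:
            raise ValueError("window must be positive")
        self.floor = floor
        # Each criterion gets its own bounded deque; the oldest scores fall off automatically.
        self._scores = defaultdict(lambda: deque(maxlen=window))

    def record(self, criterion: str, score: float) -> None:
        self._scores[criterion].append(score)

    def average(self, criterion: str) -> float:
        scores = self._scores.get(criterion)
        if not scores:
            raise KeyError(f"no scores recorded for {criterion!r}")
        return sum(scores) / len(scores)

    def degraded(self) -> list[str]:
        """Criteria whose rolling average is below the floor, sorted by name."""
        return sorted(c for c, s in self._scores.items()
                      if s and sum(s) / len(s) < self.floor)


monitor = RollingMonitor(window=3, floor=0.7)
for score in [0.9, 0.8, 0.4, 0.5]:  # with window=3, the oldest score (0.9) is evicted
    monitor.record("relevance", score)
monitor.record("coherence", 0.95)
print(monitor.degraded())  # ['relevance']
```

A windowed average smooths out single bad responses, so an alert reflects a sustained drop in quality rather than one outlier; per-response thresholds are still useful alongside it for catching individual failures.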

Multiple Evaluation Types

Evaluate only what you need. For example, conversational dialogs can be set to skip the evaluation process.
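Selective evaluation can be pictured as a routing policy that maps each interaction type to the criteria it should be scored on, with some types skipped entirely. The interaction-type labels and the `EVALUATION_POLICY` mapping below are hypothetical; how types are actually assigned and configured in RagMetrics is not specified here.

```python
# Hypothetical policy: interaction type -> criteria to evaluate.
# An empty list means "skip evaluation" for that type.
EVALUATION_POLICY: dict[str, list[str]] = {
    "rag_answer": ["accuracy", "relevance", "hallucination"],
    "support_reply": ["accuracy", "coherence"],
    "conversational": [],  # casual dialog turns are not evaluated
}


def criteria_for(interaction_type: str,
                 policy: dict[str, list[str]] = EVALUATION_POLICY) -> list[str]:
    """Return a copy of the criteria to score; unknown types are skipped (empty list)."""
    return list(policy.get(interaction_type, []))


def should_evaluate(interaction_type: str) -> bool:
    """True if at least one criterion applies to this interaction type."""
    return bool(criteria_for(interaction_type))


print(should_evaluate("rag_answer"))      # True
print(should_evaluate("conversational"))  # False
```

Defaulting unknown types to "skip" keeps evaluation costs predictable; a stricter setup might instead default to a full set of criteria so that nothing goes unmonitored.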

Flexible Configuration

Customize evaluation parameters, scoring models, and integration settings to match your specific requirements.

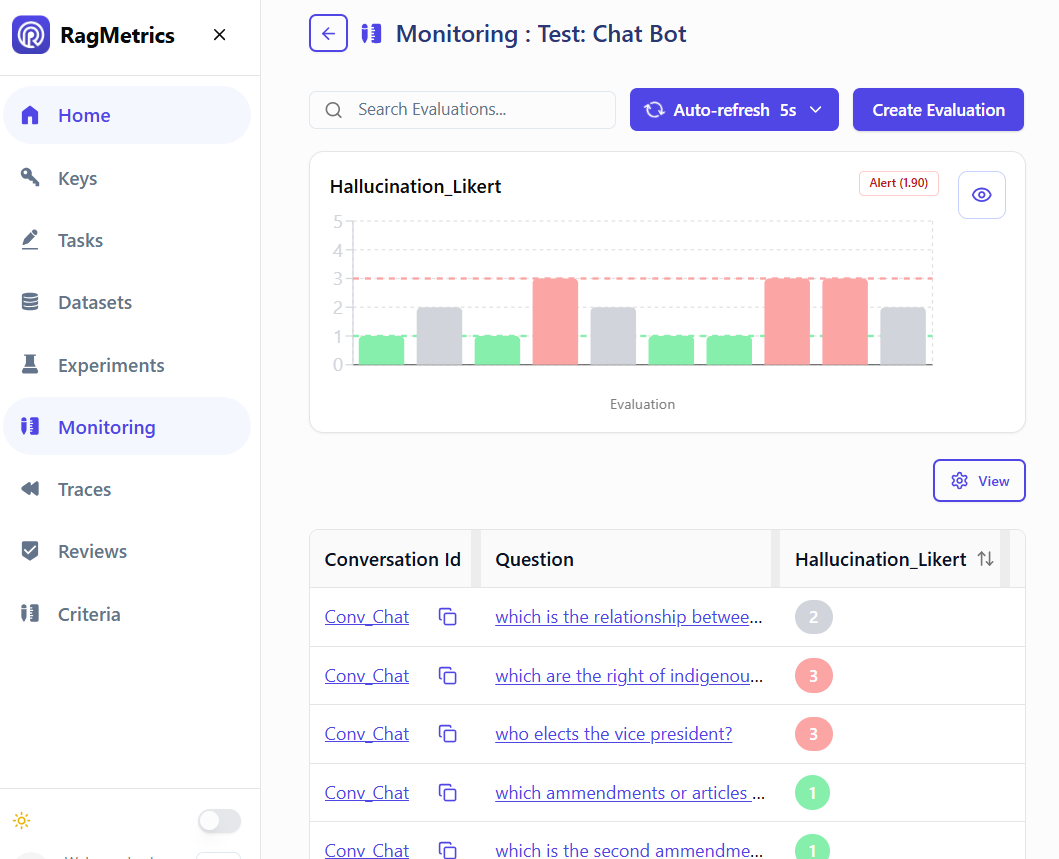

Monitoring Console

A real-time monitoring console that shows how evaluations are being scored and raises indicators when scores fall out of range or signal a problem.

Key Benefits

Real-Time Reliability

Gain confidence that your AI is performing well in production, not just in controlled tests.

Scalability

Apply evaluations across thousands of outputs without manual intervention.

Transparency

Dashboards provide clear visibility into model performance, making it easier to communicate results to stakeholders.

Customization

Tailor metrics to specific business needs, whether for customer support accuracy, content safety, or recommendation relevance.

Rapid Iteration

Continuous feedback allows teams to iterate faster, reducing the time between identifying issues and deploying fixes.

Alerts

Use the Monitoring Console to configure alert levels for each selected criterion.
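Per-criterion alert levels are commonly expressed as thresholds. The sketch below assumes scores on a 0-to-1 scale where higher is better, plus two hypothetical levels, warning and critical; the `Thresholds` and `AlertLevel` types and the `ALERT_CONFIG` values are illustrative only, and the levels and scales actually offered by the Monitoring Console may differ.

```python
from dataclasses import dataclass
from enum import Enum


class AlertLevel(Enum):
    OK = "ok"
    WARNING = "warning"
    CRITICAL = "critical"


@dataclass(frozen=True)
class Thresholds:
    warning: float   # warn when a score drops below this value
    critical: float  # escalate when a score drops below this (lower) value

    def __post_init__(self):
        if self.critical > self.warning:
            raise ValueError("critical threshold must not exceed warning threshold")


# Hypothetical per-criterion configuration (scores assumed in [0, 1], higher is better).
ALERT_CONFIG = {
    "accuracy": Thresholds(warning=0.8, critical=0.6),
    "relevance": Thresholds(warning=0.7, critical=0.5),
}


def classify(criterion: str, score: float, config=ALERT_CONFIG) -> AlertLevel:
    """Map a score to an alert level; criteria without thresholds never alert."""
    limits = config.get(criterion)
    if limits is None or score >= limits.warning:
        return AlertLevel.OK
    if score >= limits.critical:
        return AlertLevel.WARNING
    return AlertLevel.CRITICAL


def alerts(scores: dict[str, float]) -> dict[str, AlertLevel]:
    """Return only the criteria whose scores triggered an alert."""
    triggered = {}
    for criterion, score in scores.items():
        level = classify(criterion, score)
        if level is not AlertLevel.OK:
            triggered[criterion] = level
    return triggered


result = alerts({"accuracy": 0.55, "relevance": 0.75, "coherence": 0.1})
print({c: level.value for c, level in result.items()})  # {'accuracy': 'critical'}
```

Validating that the critical threshold never exceeds the warning threshold at configuration time prevents a misordered setup from silently skipping the warning stage.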

Real-World Use Cases

Customer Support Bots

Ensure responses are helpful, factual, and aligned with brand tone for consistent customer experiences.

Knowledge Retrieval Systems

Validate that retrieved documents are relevant and correctly integrated into AI-generated answers.

Enterprise AI Pipelines

Provide executives with confidence that AI-driven decisions are based on reliable, high-quality outputs.